
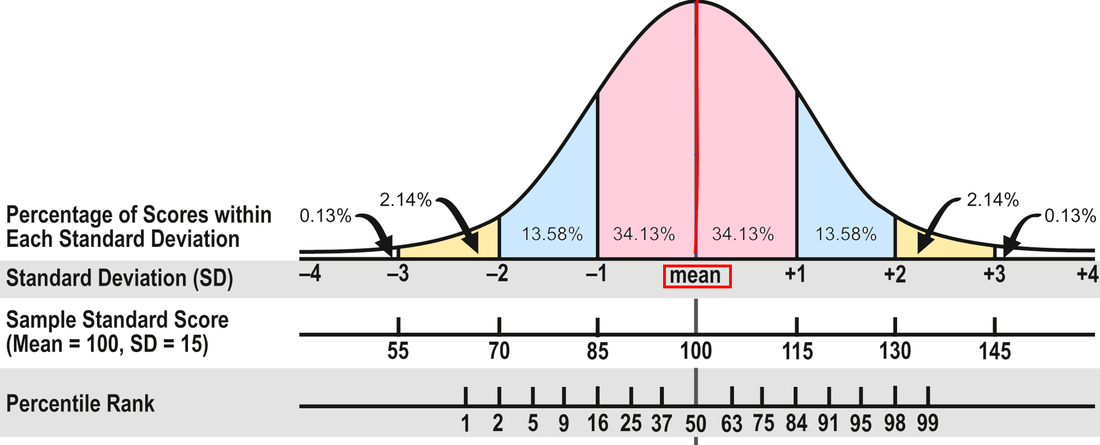

In Part 1, I discuss the different kinds of test scores and what they do and don't mean. In Part 2, I'll address how to interpret scores: what's considered average, confidence bands, the differences between composite and subtest scores, and so on.

The array of test scores in an evaluation report can be confusing. On standardized tests, the number correct is called the raw score. A raw score by itself is meaningless because it’s not the percentage correct; it’s just the number correct, and different tests have different numbers of items. So publishers convert raw scores into derived scores, which compare a student’s performance to that of other students the same age in the norm group (the people the test was standardized on).

There are several kinds of derived scores. Before I discuss a few of them, I need to introduce some statistics. I know this is technical, but bear with me because it will help in the end!

Most psychological and educational test results follow the normal, or bell-shaped, curve. The normal curve is divided into standard deviations, which measure distance from the mean (the average score). In the diagram, you can see that about 68 percent of the population will have scores within one standard deviation above or below the mean (pink area). An additional 27 percent will have scores between one and two standard deviations from the mean, for a total of about 95 percent within plus or minus two standard deviations (pink and blue areas). And 4 percent more will fall between two and three standard deviations, for a total of more than 99 percent within plus or minus three standard deviations (pink, blue, and yellow areas).

Now pat yourself on the back for getting through this section!
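If you'd like to check the normal-curve percentages yourself, here is a minimal Python sketch. It assumes a perfectly normal distribution, which real test data only approximate, and the function name `share_within` is just an illustrative label, not something taken from any test publisher.

```python
import math

def share_within(k):
    """Fraction of a normal distribution lying within k standard deviations of the mean."""
    # For a normal curve, this area equals erf(k / sqrt(2)).
    return math.erf(k / math.sqrt(2))

previous = 0.0
for k in (1, 2, 3):
    total = share_within(k)
    # "adds" is the extra share gained by widening the band by one more standard deviation.
    print(f"within +/-{k} SD: {total:.1%} (adds {total - previous:.1%})")
    previous = total

# within +/-1 SD: 68.3% (adds 68.3%)
# within +/-2 SD: 95.4% (adds 27.2%)
# within +/-3 SD: 99.7% (adds 4.3%)
```

The rounded figures of 68, 27, and 4 percent quoted for each band come from these values.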


Author

Dr. Andrea Winokur Kotula is an educational consultant for families, advocates, attorneys, schools, and hospitals. She has conducted hundreds of comprehensive educational evaluations for children, adolescents, and adults.

The intention of this website is to provide useful information but not legal advice. Every case is different. Please consult a special education attorney for legal advice.
